Experiments App

Enable rapid iteration on pre-production computer vision models with an app that helps manage the deep learning process.

Context

A couple of customers were interested in using Foundry to build and operationalize computer vision models. They rely heavily on TensorBoard to manage the deep learning process and didn’t see a viable replacement for it in Foundry. This effort started as a stopgap solution to integrate TensorBoard, but quickly evolved into a first-class application as demand for lightweight experimentation grew.

Project Structure

I partnered closely with a senior engineer through the research, design and app development phases, and then brought in two junior engineers to help build the app.

Target users:

Model developers using complex training techniques, like deep learning. Our main partners for this project were customers training computer vision models.

Signal:

→ Users need a lightweight space to train and experiment

Every production model has dozens (if not hundreds!) of iterations behind it. It’s important to fail fast and keep the messy iterations separate from production-quality models, since most of them will be thrown away.

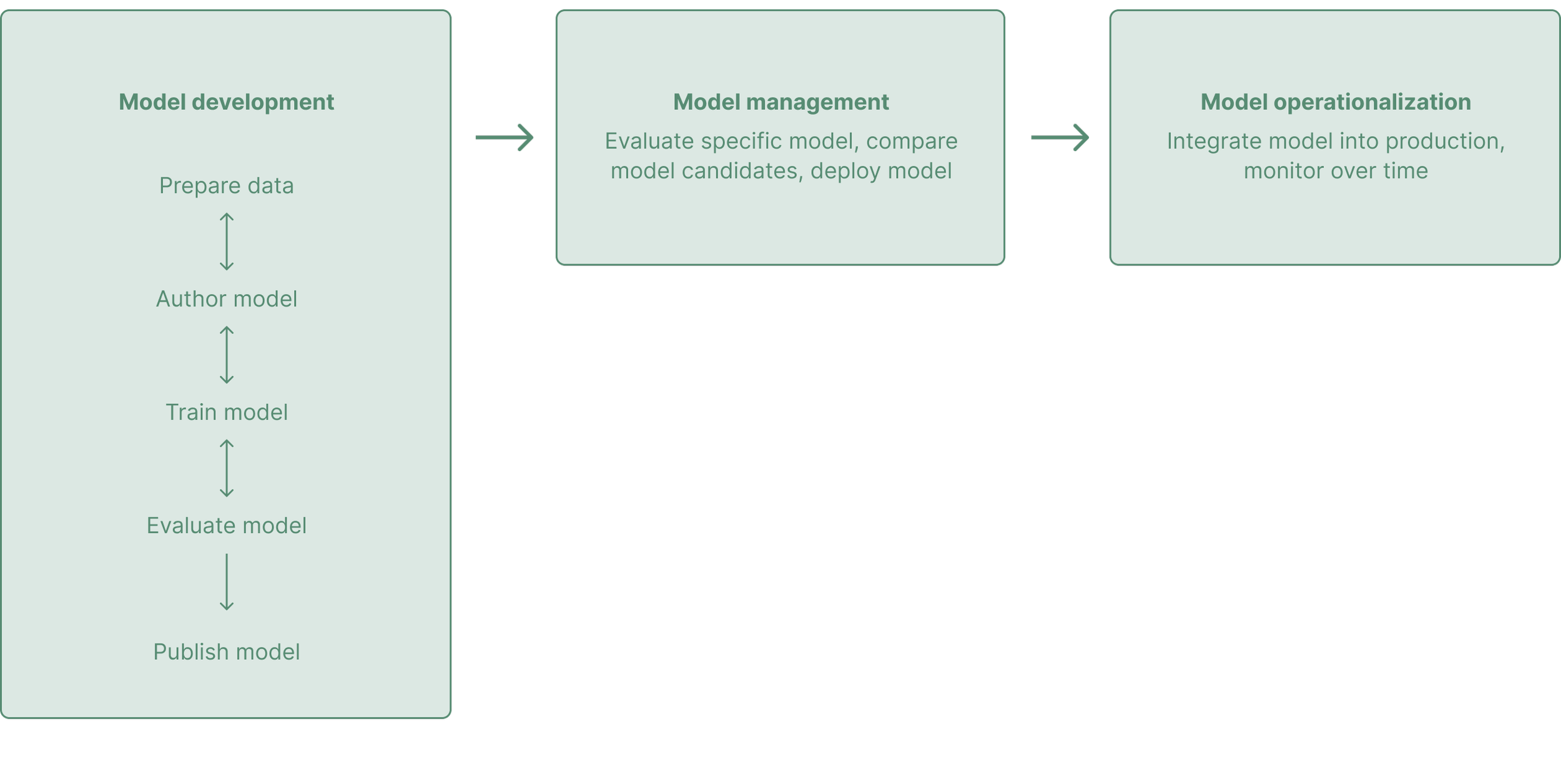

→ Customers can’t complete end-to-end modeling workflows in Foundry

Foundry’s modeling strategy has so far focused mostly on model management and deployment, leaving model development tools lacking. As a result, customers often turn to competitor tools like TensorBoard to manage model training.

User workflow:

Goal: Help users organize and evaluate early iterations in order to publish high-quality models.

⭐️ Organize

Help users organize and manage early model iterations in order to know what to try next. Facilitate collaboration by providing a central place to see what’s been tried.

📊 Evaluate

Help users compare different runs to find the best one to publish.

🚀 Publish

Make it super easy to promote a run to a standardized, production-ready model.

Concepts

An experiment is a project that contains many runs. “Run” is the industry-standard term for an early model iteration.

Eventually, the latest checkpoint of a run will be published as a production-ready model version.

Experiments app: Main screens

Experiments pane: Tracking training in-context

Model training happens in a code editor. Users execute training jobs that output “runs” containing the model and its training data. They can track training progress in the Experiments pane, a compact version of the full Experiments app.

Once a user is ready to evaluate the results, they can open the Experiments app directly from this pane.

Experiments home page

The Experiments home page lists runs as cards so users can see high-level identifying information at a glance. There are a few card variations based on run type (see below ↓).

From here, users can also flip to the “training results” tab to compare run metrics, or the “output samples” tab to review actual model outputs.

Run card variations

Compare runs

The training results page helps users compare multiple runs across the same metrics. All runs are listed in the left pane, and a chart for each logged metric appears on the right. Users can toggle each run on or off to show or hide its data on the relevant metric cards.

Click into run details + publish run as a model

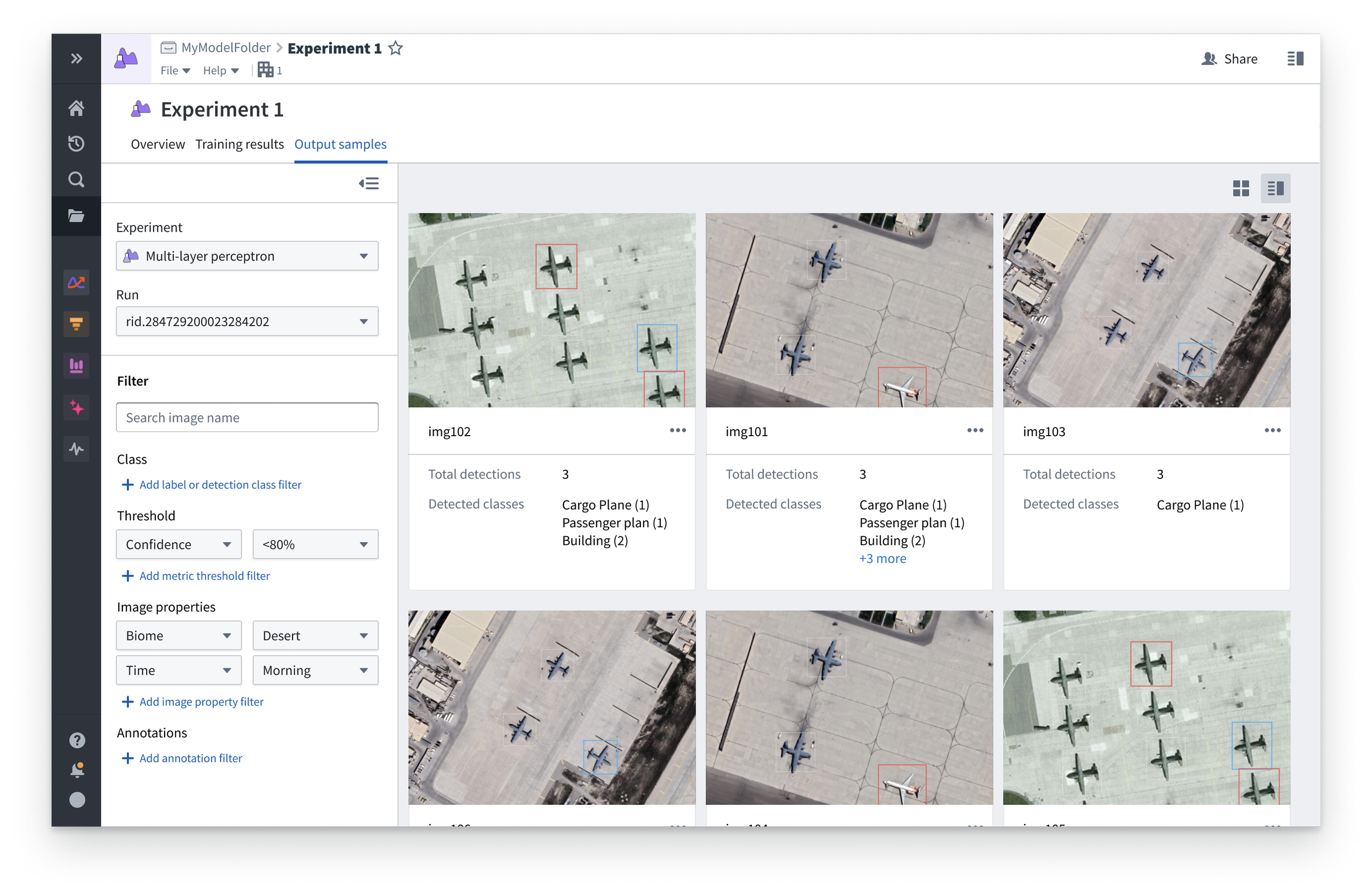

Output Samples

A rich experience to view, compare and correct a sample of output images from the training process.

User feedback

Users felt that the app was a comparable replacement for existing competitor tools

These comparison views, along with Foundry’s security and other tooling, were enough for the customer to consider adopting the app instead of their existing systems.

A quality content/media viewer was critical

Users needed a robust image viewer with built-in analysis tools to evaluate outputs effectively. There was also interest in extending it to other media types, like audio and text.

Users are excited about new + improved model development capabilities in Foundry

This fast, iterative style of model development is very appealing to model developers. It’s a critical piece of the puzzle that would help customers move their model training to Foundry.

App branding

To finish off the app, we needed a new app icon, and I took the initiative to create one! The icon had to fit alongside the other modeling resources (Modeling Objective and Model), so I started by exploring designs that matched their existing icons. Since we were overhauling both the Modeling Objective and Model resources at the same time, this snowballed into a complete rebrand of the modeling ecosystem icons.

The new icons are simple and monochrome, work together as a system, and communicate the relationship between the three resources. The model shape is a minimal “M.” As a fun Easter egg, it’s also the shape of the graph you might look at to find the local minima/maxima during model training!

Reflection

This was my first project on the modeling team, so jumping directly into designing for a process as complex as deep learning was a challenge! I learned a lot from my engineering partner and quickly gained confidence in my ability to break down technical spaces.

Next steps

This application is just one piece of the model development process. Parallel efforts include rebuilding the model resource (see my Model Hub project) and integrating industry-preferred training tools like Jupyter notebooks. Once those projects go live, this application will begin the process of becoming publicly available as well.